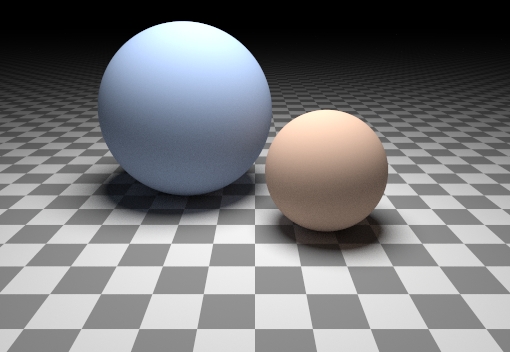

Path Tracing

A few of us at work have been having a friendly path-tracing competition (greets to Tom & Dom). It's been a lot of fun and comparing images in the office each Monday morning is a great motivation to get features in and renders out. I thought I'd write a post about it to record my progress and gather links to some reference material.

Here's a list of features I've implemented so far and some pics below:

- Monte-Carlo path tracing with explicit area light sampling at each step

- Stratified image sampling

- Importance sampled Lambert and Blinn BRDFs

- Sphere, Plane, Disc, Metaball and Distance Field primitives (no triangles yet)

- Multi-threaded tile renderer

- Cross-compiles for PS3 on Linux (runs on SPUs)

- Quite general shade-trees with Perlin noise etc

- Sphere tracing: a geometric method for the antialiased ray tracing of implicit surfaces

- A Lipschitz Method for Accelerated Volume Rendering
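
For the distance field primitives, the core loop of sphere tracing can be sketched roughly as below. This is a minimal illustration rather than the renderer's actual code: `Vec3`, `sceneDistance` (a unit sphere at the origin) and the step and epsilon constants are all assumptions made for the example.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
inline float length(Vec3 a) { return std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z); }

// Hypothetical scene: signed distance to a unit sphere centred at the origin.
inline float sceneDistance(Vec3 p) { return length(p) - 1.0f; }

// Marches along origin + t * dir (dir must be normalised). Returns true and
// writes the hit distance to tHit if the ray gets within epsilon of the
// surface before reaching tMax or running out of steps.
inline bool sphereTrace(Vec3 origin, Vec3 dir, float tMax, float& tHit)
{
    const float epsilon = 1e-4f; // assumed hit tolerance
    const int maxSteps = 128;    // assumed step budget
    float t = 0.0f;
    for (int i = 0; i < maxSteps && t < tMax; ++i)
    {
        float d = sceneDistance(origin + dir * t);
        if (d < epsilon) { tHit = t; return true; }
        t += d; // safe step: no surface lies closer than d
    }
    return false;
}
```

The step is only safe if `sceneDistance` never overestimates the true distance, which is the Lipschitz condition the papers above discuss.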

And for global illumination and path-tracing in general:

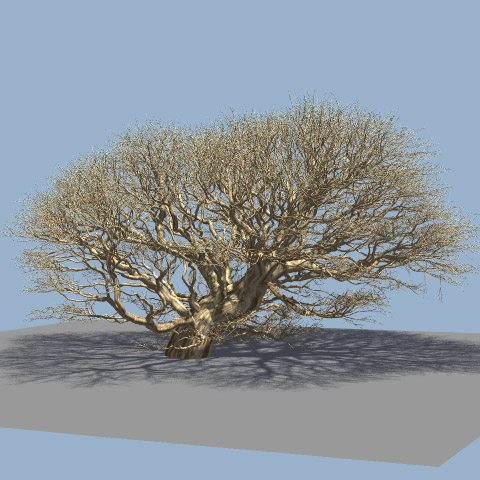

Trees (the green kind)

I've always had an interest in computer generated plants so I was pleased to read Self-organising tree models for image synthesis from Siggraph this year.

The paper basically pulls together a bunch of techniques that have been around for a while and uses them to generate some really good looking tree models.

Seeing as I've had a bit of time on my hands between finishing Batman and starting at LucasArts, I decided to put together an implementation in OpenGL (being a games programmer I want realtime feedback).

Some screenshots below and a Win32 executable available - Plant.zip

Some Notes:

I implemented both the space colonisation and shadow propagation methods. Space colonisation is nice in that you can draw where the plant should grow by placing space samples with the mouse. This allows some pretty funky topiary, but I found it difficult to grow convincing real-world plants with this method. The demo only uses the shadow propagation method.

Creating the branch geometry from generalised cylinders requires generating a continuous coordinate frame along a curve without any twists or knots. I used a parallel transport frame for this, which worked out really nicely. These two papers describe the technique and the problem:

Parallel Transport Approach to Curve Framing

Quaternion Gauss Maps and Optimal Framings of Curves and Surfaces (1998)
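
As a rough sketch of the idea (not the code from the demo), a parallel transport frame along a polyline can be built by rotating the previous normal by the smallest rotation that takes the previous tangent onto the current one. The `Vec3` and `Frame` types, the function names and the choice of forward-difference tangents are all assumptions for this example.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 cross(Vec3 a, Vec3 b)
{
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
inline Vec3 normalize(Vec3 a) { return a * (1.0 / std::sqrt(dot(a, a))); }

struct Frame { Vec3 tangent, normal, binormal; };

// Rodrigues' formula: rotate v about the unit vector axis by angle radians.
inline Vec3 rotate(Vec3 v, Vec3 axis, double angle)
{
    double c = std::cos(angle), s = std::sin(angle);
    return v * c + cross(axis, v) * s + axis * (dot(axis, v) * (1.0 - c));
}

// Assumes consecutive points are distinct and initialNormal is not parallel
// to the first segment. Exactly reversed tangents are not handled.
inline std::vector<Frame> parallelTransportFrames(const std::vector<Vec3>& points,
                                                  Vec3 initialNormal)
{
    std::vector<Frame> frames;
    const std::size_t n = points.size();
    if (n < 2) return frames;

    Vec3 prevTangent = normalize(points[1] - points[0]);
    // Project the starting normal into the plane perpendicular to the first tangent.
    Vec3 normal = normalize(initialNormal - prevTangent * dot(initialNormal, prevTangent));

    for (std::size_t i = 0; i < n; ++i)
    {
        // Forward difference; the last point reuses the final segment direction.
        Vec3 tangent = (i + 1 < n) ? normalize(points[i + 1] - points[i]) : prevTangent;

        // Rotate the previous normal by the smallest rotation taking prevTangent to tangent.
        Vec3 axis = cross(prevTangent, tangent);
        double sinAngle = std::sqrt(dot(axis, axis));
        if (sinAngle > 1e-12)
        {
            double angle = std::atan2(sinAngle, dot(prevTangent, tangent));
            normal = rotate(normal, axis * (1.0 / sinAngle), angle);
        }

        frames.push_back({tangent, normal, cross(tangent, normal)});
        prevTangent = tangent;
    }
    return frames;
}
```

Because each frame only depends on the previous one, the normal never flips the way a Frenet frame can at inflection points, which is what keeps the generalised cylinders free of twists.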

Getting the lighting and leaf materials to look vaguely realistic took quite a lot of tweaking and I'm not totally happy with it. Until I implemented self-shadowing on the trunk and leaves it looked very weird. Also you need to account for the transmission you get through the leaves when looking toward the light:

There is a nice article in GPU Gems 3 on how SpeedTree do this.

The leaves are normal mapped with a simple Phong specular. I messed about with various modified diffuse models like half-Lambert but eventually just went with standard Lambert. It would be interesting to use a more sophisticated ambient term.
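
For reference, the two diffuse terms compare roughly as follows. This is a sketch using the commonly quoted half-Lambert formulation (scale and bias, then square); the function names are made up for the example and the demo's shaders may differ.

```cpp
#include <algorithm>

// nDotL is the dot product of the unit surface normal and unit light direction.

// Standard Lambert: clamp back-facing light to zero.
inline float lambert(float nDotL) { return std::max(nDotL, 0.0f); }

// Half-Lambert: remap [-1, 1] to [0, 1], then square, so that surfaces
// facing away from the light still receive some shading.
inline float halfLambert(float nDotL)
{
    float wrapped = nDotL * 0.5f + 0.5f;
    return wrapped * wrapped;
}
```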

Still a lot of scope for performance optimisation, the leaves are alpha-tested right now so it's doing loads of redundant fragment shader work (something like Emil Persson's particle trimmer would be useful here).

If you want to take a look at the source code drop me an email.

Known issues:

On my NVIDIA card it runs like a dog when the vert count is > 10^6; I need to break it up into smaller vertex buffers.

Some ATI mobile drivers don't like the variable number of shadow mapping samples. If that's your card then I recommend hacking the shaders to disable it.

Atomic float+

No hardware I know of has atomic floating point operations but here's a handy little code snippet from Matt Pharr over on the PBRT mailing list which emulates the same functionality using an atomic compare and swap:

    inline float AtomicAdd(volatile float *val, float delta) {
        union bits { float f; int32_t i; };
        bits oldVal, newVal;
        do {
            oldVal.f = *val;
            newVal.f = oldVal.f + delta;
        } while (AtomicCompareAndSwap(*((AtomicInt32 *)val),
                                      newVal.i, oldVal.i) != oldVal.i);
        return newVal.f;
    }
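
If you have a C++11 compiler, the same compare-and-swap loop can be written portably with `std::atomic<float>`. This is my own sketch of the idea, not the PBRT code; the name `atomicAddFloat` is made up for the example.

```cpp
#include <atomic>

// Atomically adds delta to target and returns the new value.
// Emulates a float atomic add with a compare-and-swap retry loop.
inline float atomicAddFloat(std::atomic<float>& target, float delta)
{
    float oldVal = target.load(std::memory_order_relaxed);
    float newVal;
    do {
        newVal = oldVal + delta;
        // On failure compare_exchange_weak writes the current value of
        // target into oldVal, so the next iteration retries with fresh data.
    } while (!target.compare_exchange_weak(oldVal, newVal));
    return newVal;
}
```

The comparison is done on the object representation, so it behaves like the integer compare in the union version above.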

In unrelated news, I've taken a job at LucasArts which I'll be starting soon. I'm sad to say goodbye to Rocksteady; they're a great company to work for and I'll miss the team there.

Looking forward to San Francisco though, 12 hours closer to my home town (Auckland, New Zealand) and maybe now I can finally get along to Siggraph or GDC. If anyone has some advice on where to live there please let me know!

Also a few weeks in between jobs so hopefully time to write some code and finish off all the tourist activities we never got around to in London.

Fin!

Batman: Arkham Asylum is finished and the demo is up on PSN and Xbox Live. I was pretty much responsible for the PS3 version on the engineering side so anything wrong with it is ultimately my fault. I think most PS3 engineers working on a cross platform title will tell you that there is always some apprehension of the 'side by side comparisons' which are so popular these days. This one popped up pretty quickly after the demo was released:

http://www.eurogamer.net/articles/digitalfoundry-batman-demo-showdown-blog-entry

The article is quite accurate (unlike some of the comments) and it was generally very positive which is great to see as we put a lot of effort into getting parity between the two console versions.

The game has been getting a good reception which is especially nice given that Batman games have a long tradition of being terrible.

Tim Sweeney's HPG talk

This link was going round our office, a discussion over at Lambda the Ultimate regarding Tim Sweeney's HPG talk.

http://lambda-the-ultimate.org/node/3560

Tim chimes in a bit further down in the comments.

Handy hints for Bovine occlusion

Code517E recently reminded me of a site I've used before when looking up form factors for various geometric configurations.

One I had missed the first time through is the differential element on ceiling, floor or wall to cow.

http://www.me.utexas.edu/~howell/sectionb/B-68.html

Very handy if you're writing a farmyard simulator I'm sure.

Particle lighting

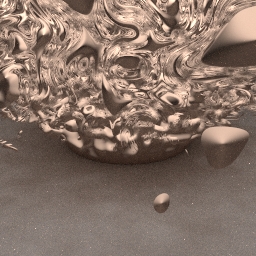

I put together an implementation of the particle shadowing technique NVIDIA showed off a while ago. My original intention was to do a survey of particle lighting techniques, in the end I just tried out two different methods that I thought sounded promising.

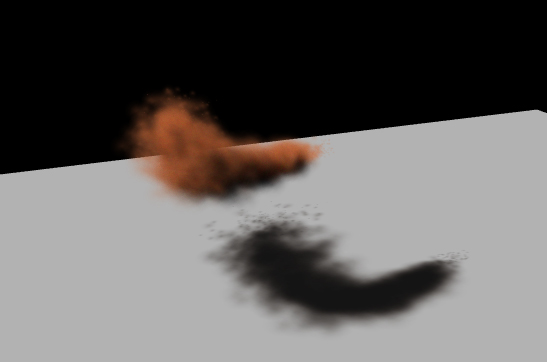

The first was the one ATI used in the Ruby White Out demo. The best takeaway from it is that they write out the min distance, max distance and density in one pass. You can do this by setting your RGB blend equation to GL_MIN, your alpha blend equation to GL_FUNC_ADD and writing out r=z, g=1-z, b=0, a=density for each particle (you can reconstruct the max depth as 1 - min(1-z); think of g as the minimum distance from the far end point). Here's a screen:

The technique needs a bit of fudging to look OK: blur the depths and add some smoothing functions. It only works for mostly convex objects, so it's good for amorphous blobs (clouds maybe). Performance wise it is probably the best candidate for current-gen consoles.
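
To make the blend trick concrete, here is a small CPU emulation of what ends up in one pixel: MIN on RGB and additive on alpha (in OpenGL terms something like `glBlendEquationSeparate(GL_MIN, GL_FUNC_ADD)` with `glBlendFunc(GL_ONE, GL_ONE)`). The `Pixel` and `Particle` types are assumptions for the example, as are the clear colour and a float render target so that alpha is not clamped.

```cpp
#include <algorithm>
#include <vector>

struct Pixel { float r, g, b, a; };
struct Particle { float z; float density; }; // z is normalised depth in [0, 1]

// Emulates blending every particle that covers one pixel.
inline Pixel accumulate(const std::vector<Particle>& particles)
{
    // Clear to RGB = 1 so the MIN blend has something to shrink, alpha = 0.
    Pixel dst = {1.0f, 1.0f, 1.0f, 0.0f};
    for (const Particle& p : particles)
    {
        Pixel src = {p.z, 1.0f - p.z, 0.0f, p.density};
        dst.r = std::min(dst.r, src.r); // nearest depth
        dst.g = std::min(dst.g, src.g); // min(1 - z), i.e. farthest depth
        dst.b = std::min(dst.b, src.b); // unused channel
        dst.a += src.a;                 // accumulated density
    }
    return dst;
}

inline float minDepth(const Pixel& p) { return p.r; }
inline float maxDepth(const Pixel& p) { return 1.0f - p.g; }
```

With particles at depths 0.25, 0.5 and 0.75 the pixel ends up with a min depth of 0.25, a max depth of 0.75 and the summed density in alpha.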

http://ati.amd.com/developer/gdc/2007/ArtAndTechnologyOfWhiteout(Siggraph07).pdf

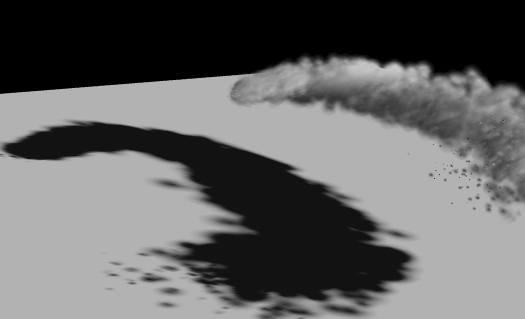

IMO the NVIDIA technique is much nicer visually. It gives you fairly accurate self shadowing, which looks great but is considerably more expensive. I won't go into the implementation details too much as the paper does a pretty good job of describing it.

http://developer.download.nvidia.com/compute/cuda/sdk/website/projects/smokeParticles/doc/smokeParticles.pdf

The NVIDIA demo uses 32k particles and 32 slices but you can get pretty decent results with far fewer. Here's a pic of my implementation, this is running on my trusty 7600 with 1000 particles and 10 slices through the volume:

Unfortunately you need quite a lot of quite transparent particles, otherwise there are noticeable artifacts as particles change order and end up in different slices. You can improve this by using a non-linear distribution of slices so that you use more slices up front (which works nicely because the extinction for light in participating media is exponential).
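
One way to get such a distribution (a sketch under my own assumptions, not something taken from the NVIDIA paper) is to place slice boundaries so that each slice removes an equal share of the light. Assuming a homogeneous medium with extinction coefficient `sigma`, transmittance after distance d is exp(-sigma * d), and inverting that gives the boundary positions. The function name and parameters are hypothetical.

```cpp
#include <cmath>
#include <vector>

// Returns sliceCount + 1 boundary distances from the light, from 0 to depth.
// Each slice removes the same amount of transmittance, so slices are thin
// near the light and get thicker further in.
inline std::vector<float> sliceBoundaries(int sliceCount, float depth, float sigma)
{
    std::vector<float> boundaries(sliceCount + 1);
    const float endTransmittance = std::exp(-sigma * depth);
    for (int i = 0; i <= sliceCount; ++i)
    {
        float u = float(i) / float(sliceCount);
        // Transmittance falls linearly in u from 1 to endTransmittance.
        float transmittance = 1.0f - u * (1.0f - endTransmittance);
        boundaries[i] = -std::log(transmittance) / sigma;
    }
    return boundaries;
}
```

A larger `sigma` pushes more of the slices towards the front; as `sigma` approaches zero the spacing tends towards uniform.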

Looking forward to tackling some surface shaders next.

Code charity

A friend just sent me this:

It's a non-profit organisation with the goal of developing educational games for developing countries that run on 8-bit NES hardware. The old Nintendo chips are now patent-free and clones are very common:

They're trying to recruit programmers with a social conscience. I'm not old-school enough to know 8-bit assembly but then I wouldn't mind learning... who needs GPUs anyway!

Red balls

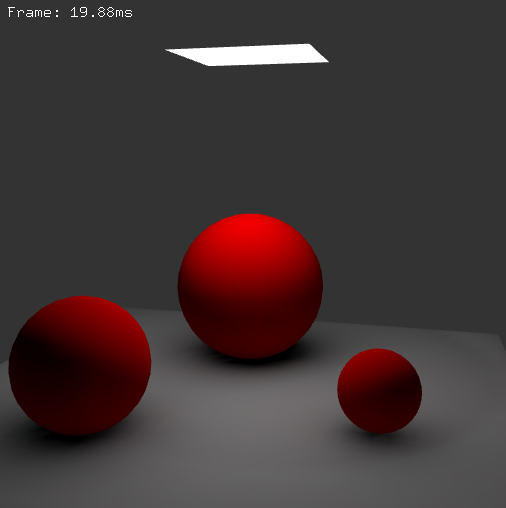

A small update on my global illumination renderer: I've ported the radiance transfer to the GPU. It was fairly straightforward as my CPU tree structure was already set up for easy GPU traversal, basically just a matter of converting array offsets into texture coordinates and packing into an indices texture.
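
The offset-to-texture-coordinate conversion is roughly the following. This is a sketch assuming the node array is laid out row by row in a `width` x `height` texture and sampled at texel centres; the names are made up for the example.

```cpp
struct TexCoord { float u, v; };

// Maps a linear array offset to the centre of the matching texel in a
// width x height texture that stores the array row by row.
inline TexCoord offsetToTexCoord(int offset, int width, int height)
{
    int x = offset % width;
    int y = offset / width;
    return {(x + 0.5f) / width, (y + 0.5f) / height};
}
```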

The hardest part is of course wrangling OpenGL to do what you want and give you a proper error message. This site is easily the best starting point I found for GPGPU stuff:

http://www.mathematik.uni-dortmund.de/~goeddeke/gpgpu/tutorial.html

So here's an image. There are 7850 surfels and it runs in about 20ms on my old-school NVIDIA 7600, so it's still at least an order of magnitude or two slower than you would need for typical game scenes. But besides that it's fun to pull area lights around in real time.

Not as much colour bleeding as you might expect, there is some but it is subtle.

Tree traversals

I changed my surfel renderer over to use a pre-order traversal layout for the nodes, this generally gives better cache utilisation and I did see a small speed up from using it. The layout is quite nice because to traverse your tree you just linearly iterate over your array and whenever you find a subtree you want to skip you just increment your node pointer by the size of that subtree (which is precomputed, see Real Time Collision Detection 6.6.2).
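
A minimal sketch of that traversal, assuming each node stores the size of its subtree including itself (the `Node` fields and the `visit` callback are hypothetical, not my renderer's actual types):

```cpp
#include <cstddef>
#include <vector>

struct Node
{
    float value;             // hypothetical payload
    std::size_t subtreeSize; // nodes in this subtree, including this one (>= 1)
};

// Walks a tree stored in pre-order. visit(node, index) returns true to
// descend into the node's children, or false to skip the whole subtree.
template <typename Visit>
void traverse(const std::vector<Node>& nodes, Visit visit)
{
    std::size_t i = 0;
    while (i < nodes.size())
    {
        if (visit(nodes[i], i))
            i += 1;                    // first child (or next node for a leaf) is adjacent
        else
            i += nodes[i].subtreeSize; // jump past every descendant
    }
}
```

There is no stack and no child pointers; skipping a subtree is a single add, which is also why the layout maps so easily onto a GPU loop.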

The best optimisation though comes from compacting the size of the surfel data, which again improves the cache performance. As some parts of the traversal don't need all of the surfel data it seems to make sense to split things out, for instance to store the hierarchy information and the area separately from the colour/irradiance information.

In fact it seems like when generalised, this idea leads you to the structure of arrays (SOA) layout, which essentially provides the finest grained breakdown where you only pull into the cache what you use and for all the nodes that you skip over there is no added cost.

I haven't done any timings to see how much of a win this would actually be, mainly because dealing with SOA data is so damn cumbersome.

It definitely seems like something you should do after you've done all your hierarchy building and node shuffling which is just so much more intuitive with structures. Then you can just 'bake' it down to SOA format and throw it at the GPU/SIMD.
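
The 'bake' step could look something like this. It is only a sketch: the `Surfel` fields are invented for the example and a real surfel would carry more data (position, normal, irradiance and so on).

```cpp
#include <cstddef>
#include <vector>

// Array-of-structures form: convenient while building and shuffling the tree.
struct Surfel
{
    float area;
    std::size_t subtreeSize;
    float colour[3];
};

// Structure-of-arrays form: one tightly packed array per field, so a
// traversal that only reads area and subtreeSize never touches colour.
struct SurfelsSoA
{
    std::vector<float> area;
    std::vector<std::size_t> subtreeSize;
    std::vector<float> red, green, blue;
};

inline SurfelsSoA bake(const std::vector<Surfel>& surfels)
{
    SurfelsSoA out;
    out.area.reserve(surfels.size());
    out.subtreeSize.reserve(surfels.size());
    out.red.reserve(surfels.size());
    out.green.reserve(surfels.size());
    out.blue.reserve(surfels.size());
    for (const Surfel& s : surfels)
    {
        out.area.push_back(s.area);
        out.subtreeSize.push_back(s.subtreeSize);
        out.red.push_back(s.colour[0]);
        out.green.push_back(s.colour[1]);
        out.blue.push_back(s.colour[2]);
    }
    return out;
}
```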