PBRT

I just bought a copy of Physically Based Rendering, I've been meaning to get one for ages as it's often recommended and I thought it might be useful given my recent interest in global illumination. I'm also hoping to get a more formal background in rendering rather than the hacktastic world of real time.

The subjects covered are broad and it's very readable. It's my first exposure to literate programming, where essentially the book describes and contains the full implementation of a program. In fact the source code for their renderer is generated (tangled) from the definition of the book before compilation.

The only problem is the amount it weighs, I like to read on the tube but 1000 page hard backs aren't exactly light reading.

(Almost) realtime GI

After my initial implementation of surfel based illumination I've extended it to do hierarchical clustering of surfels based on a similar idea to the one presented in GPU Gems 2.

A few differences:

I'm using a k-means clustering to build the approximation hierarchy bottom up. A couple of iterations of Lloyd's algorithm provides pretty good results. Really you could get away with one iteration.

To seed the clustering I'm simply selecting every n'th surfel from the source input. At first I thought I should be choosing a random spatial distribution but it turns out clustering based on regular intervals in the mesh works well. This is because you will end up with more detail in highly tesselated places, which is what you want (assuming your input has been cleaned).

For example, a two tri wall will be clustered into one larger surfel where as a highly tesselated sphere will be broken into more clusters.

The error metric I used to do the clustering is this:

Error = (1.0f + (1.0f-Dot(surfel.normal, cluster.normal)) * Length(surfel.pos-cluster.pos)

So it's a combination of how aligned the surfel and cluster are and how far away they are from each other. You can experiment with weighting each of those metrics individually but just summing seems to give good results.

When summing up the surfels to form your representative cluster surfel you want to:

a) sum the area

b) average the position, normal, emission and albedo weighted by area

Weighting by area is quite important and necessary for the emissive value or you'll basically be adding energy into the simulation.

Then you get to the traversal, Bunnel recommended skipping a sub-tree of surfels if the distance to the query point is > 4*radius of the cluster surfel. That gives results practically identical to the brute force algorithm and I think you can be more agressive without losing much quality at all.

I get between a 4-6x speed up using the hierarchy over brute force. Not quite realtime yet but I haven't optimised the tree structure at all, I'm also not running it on the GPU :)

It seems like every post I make here has to reference Christer Ericson somehow but I really recommend his book for ideas about optimising bounding volumes. Loads of good stuff in there that I have yet to implement.

Links:

Real-time Soft Shadows in Dynamic Scenes using Spherical Harmonic Exponentiation

Lightcuts: a scalable approach to illumination

Indirect illumination

It's been a while since I checked in on the state of the art in global illumination but there is some seriously cool research happening at the moment.

I liked the basic idea Dreamworks used on Shrek2 (An Approximate Global Illumination System for Computer Generated Films) which stores direct illumination in light maps and then runs a final gather pass on that to calculate one bounce of indirect. It might be possible to adapt this to real-time if you could pre-compute and store the sample coordinates for each point..

However the current state of the art seems to be the point based approach that was first presented by Michael Bunnell with his GPU Gems 2 article Dynamic Ambient Occlusion and Indirect Lighting, he approximates the mesh as a set of oriented discs and computes the radiance transfer dynamically on the GPU.

Turns out Pixar took this idea and now use it on all their movies, Pirates of Carribean, Wall-E, etc. The technique is described here in Point Based Approximate Color Bleeding. Bunnell's original algorithm was O(N^2) in the number of surfels but he used an clustered hierarchy to get that down to O(N.log(N)), Pixar use an Octree which stores a more accurate spherical harmonic approximation at each node.

What's really interesting is how far Bunnell has pushed this idea, if you read through Fantasy Lab's recent patent (August 2008), there are some really nice ideas in there that I haven't seen published anywhere.

Here's a summary of what's new since the GPU Gems 2 article:

- Fixed the slightly cumbersome multiple shadow passes by summing 'negative illumination' from back-facing surfels

- Takes advantage of temporal coherence by simply iterating the illumination map each frame

- Added some directional information by subdividing the hemisphere into quadrants

- Threw in some nice subdivision surfaces stuff in at the end

Anyway, I knocked up a prototype of the indirect illumination technique and it seems to work quite well. The patent leaves out loads of information (and spends two pages describing what a GPU is), but it's not too difficult to work out the details (note the form factor calculation is particularly simplified).

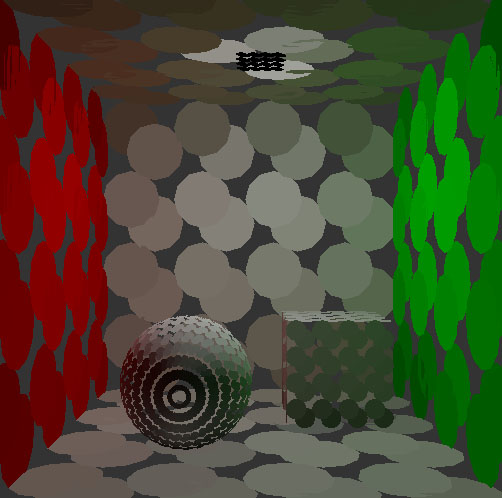

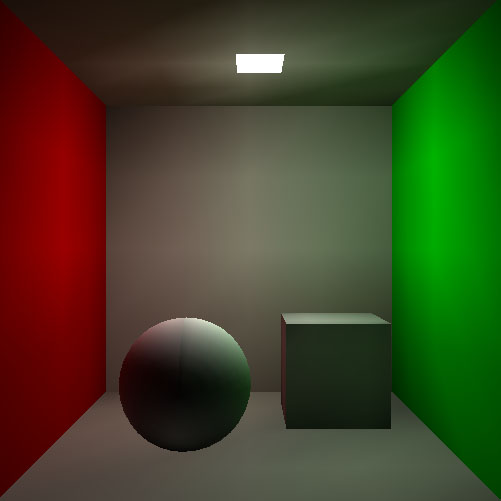

Here are the results from a very low resolution mesh, in reality you would prime your surfels with direct illumination calculated in the traditional way with shadow mapping / shaders then let the sim bounce it round but in this case I've done the direct lighting using his method as well.

Disclaimer: some artifacts are visible here due to the way illumination is baked back to the mesh and there aren't really enough surfels to capture all the fine detail but it's quite promising.

This seems like a perfect job for CUDA or Larrabee as the whole algorithm can be run in parallel. You can do it purely through DirectX or OpenGL but it's kind've nasty.

Branch free Clamp()

One of my work mates had some code with a lot of floating point clamps in it the other day so I wrote this little branch free version using the PS3's floating point select intrinsic:

float Clamp(float x, float lower, float upper)

{

float t = __fsels(x-lower, x, lower);

return __fsels(t-upper, upper, t);

}

__fsels basically does this:

float __fsels(float x, float a, float b)

{

return (x >= 0.0f) ? a : b

}

I measured it to be 8% faster than a standard implementation, not a whole lot but quite fun to write. The SPUs have quite general selection functionality which is more useful, some stuff about it here:

http://realtimecollisiondetection.net/blog/?p=90

(Not sure about this free Wordpress code formatting, I may have to move it to my own host soon)

Two threads, one cache line

An interesting thread going around the GDA mailing list at the moment about multithreaded programming reminded me of a little test app I wrote a while back to measure the cost of two threads accessing memory on the same cache line.

The program basically creates two threads which increment a variable a large number of times measuring the time it takes to complete with different distances between the write addresses. Something like this:

__declspec (align (512)) volatile int B[128];

DWORD WINAPI ThreadProc(LPVOID param)

{

// read/write the address a whole lot

for (int i=0; i < 10000000; ++i)

{

(*(volatile int*)param)++;

}

return 0;

}

int main()

{

volatile int* d1 = &B[0];

volatile int* d2 = &B[127];

while (d1 != d2)

{

HANDLE threads[2];

// QPC wrapper

double start = GetSeconds();

threads[0] = CreateThread(NULL, 0, ThreadProc, (void*)d1, 0, NULL);

threads[1] = CreateThread(NULL, 0, ThreadProc, (void*)d2, 0, NULL);

WaitForMultipleObjects(2, threads, TRUE, INFINITE);

double end = GetSeconds();

--d2;

cout << (d2-d1) * sizeof(int) << "bytes apart: " << (end-start)*1000.0f << "ms" << endl;

}

int i;

cin >> i;

return 0;

}

On my old P4 with a 64 byte cache line these are the results:

128bytes apart: 17.4153ms

124bytes apart: 17.878ms

120bytes apart: 17.4028ms

116bytes apart: 17.3625ms

112bytes apart: 17.959ms

108bytes apart: 18.0241ms

104bytes apart: 17.2938ms

100bytes apart: 17.6643ms

96bytes apart: 17.5377ms

92bytes apart: 19.3156ms

88bytes apart: 17.2013ms

84bytes apart: 17.9361ms

80bytes apart: 17.1321ms

76bytes apart: 17.5997ms

72bytes apart: 17.4634ms

68bytes apart: 17.6562ms

64bytes apart: 17.4704ms

60bytes apart: 17.9947ms

56bytes apart: 149.759ms ***

52bytes apart: 151.64ms

48bytes apart: 150.132ms

44bytes apart: 125.318ms

40bytes apart: 160.33ms

36bytes apart: 147.889ms

32bytes apart: 152.42ms

28bytes apart: 157.003ms

24bytes apart: 149.552ms

20bytes apart: 142.372ms

16bytes apart: 136.908ms

12bytes apart: 145.691ms

8bytes apart: 146.768ms

4bytes apart: 128.408ms

0bytes apart: 125.655ms

You can see when it gets to 56 bytes there is a large penalty (9x slower!) as it brings the cache-coherency protocol into play which forces the processor to reload from main memory.

Actually it turns out this is called "false sharing" and it's quite well known, the common solution is to pad your shared data to be at least one cache line apart.

Refs:

http://software.intel.com/en-us/articles/reduce-false-sharing-in-net/

http://www.ddj.com/embedded/196902836

More Metaballs

So after running my Metaballs demo on my girlfriends laptop it appears to be GPU limited due to fillrate. This is mainly due to the overkill number of particles in my test setup and the fact they hang round for so long, but the technique is fillrate heavy so it might be a problem.

It'd be nice to do a multithreaded CPU implementation to see how that compares, but the advantage of the current method is that it keeps the CPU free to do other things.

You could probably get more performance in some cases by uploading all the metaballs as shader parameters (or as a texture) and evaluating them directly in the pixel shader. Also I just realised I could probably render the 'density' texture at a lower resolution for a cheap speedup.

GPU Metaballs

I've been meaning to implement this idea for ages, it's a GPU implementation of 2D metaballs. It's very simple, very fast and doesn't even require hardware shader support. Seems like the kind of effect that could be useful in some little game..

It's quite fun to play with (use left drag to rotate the emitter), so I might try and fancy it up with some better graphics and collision.

The demo is below, I'll probably release source at some stage but at the moment it's all tied up in my dev framework which needs to be cleaned up (the exe is also larger than it should be as I have all sorts of crap linked in).

The simulation is just done using my simple particle system but it would be fun to implement smoothed particle hydrodynamics..

Sprite mipmaps

Information on how to correctly make sprite textures is just not on the web anywhere. There are so many subtle problems with making transparent textures for use in a 3D engine, here are some of the things I learned recently from my hobby project:

Generally the easiest way is to paint on a transparent background, that is, don't try and paint the alpha channel yourself. That's because it's a complete nightmare trying to match up your rgb and alpha channels correctly. This is one of the reasons why you see loads of games with dark 'halo's around their particle textures (although it's not the only reason). Unless you want to get really tricky and try and paint your own pre-multiplied texture it's not worth it, just create a new image in Photoshop with a transparent background and export as PNG.

Note: the Photoshop Targa exporter won't export the alpha channel properly from a transparent image, it only supports alpha if you explicitly add the alpha channel to an RGB image and paint it yourself. This is a slight pain because I've always favoured Targa as an image file format that's easy to read / write but in this case Photoshop drops the ball once again.

OK now you have your image exported, the image is currently NOT pre-multiplied, it may look like it in Photoshop and most image viewers but on disc it is not pre-multiplied.

Read the file into ram using libPNG or your PNG library of choice.

Generate mip maps... this is where it gets interesting. If you just call something like gluBuild2DMipmaps() your texture will be wrong, the lower level mipmaps will have a dark halo around the edges. Now what most artists do in this case is convert it to a non-transparent image and start painting in some bright colour around the edges of their sprite in the RGB channels, this is the equivalent of a really nasty filthy hack from which no good can come. You can never paint just the right colour in there and can never get it in just the right place, if you're having this problem go talk to your coders and get them to implement a better texture import pipeline (see below):

The fix is really quite simple and you should probably have already guessed that it is to use pre-multiplied alpha. If you haven't heard of it before read here:

http://keithp.com/~keithp/porterduff/

Although it's not mentioned on those sites pre-multiplication is also the solution to building mipmaps correctly. Let's imagine you don't pre-multiply before you downscale to build your mipmap, you have four source RGBA texels from the higher level:

t1, t2, t3, t4In some kind of box filter arrangement, then your destination pixel in the next lower mip is computed like this:

lowermip.rgb = (t1.rgb + t2.rgb + t3.rgb + t4.rgb) / 4 lowermip.a = (t1.a + t2.a + t3.a + t4.a) /4

And when you come to render with your blend function set to GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA you get:

framebuffer.rgb = lowermip.rgb * lowermip.a + framebuffer.rgb * (1.0-lowermip.a)

It should be farily obvious why this doesn't work, the filtered RGB channel will contain information from neighbouring pixels even if those pixels have a zero alpha. As the background in most exported source textures is black it's the equivalent of 'pulling in' black to your lower mip rgb channel.

The very simple solution is to use pre-multiplication which makes your lower mip calculation like this:

lowermip.rgb = (t1.rgb*t1.a + t2.rgb*t2.a + t3.rgb*t3.a + t4.rgb*t4.a) / 4; lowermip.a = (t1.a + t2.a + t3.a + t4.a) / 4;

So now you're weighting each pixels contribution to your lower mip by the appropriate alpha value. And when you render you set your blend modes to GL_ONE, GL_INV_SRC_ALPHA. Not only do your mips work correctly you can now 'mix' additive and subtractive blending which is great for fire turning into smoke for instance (see references below).

You don't need to export your image pre-multiplied, I fix mine up at import / asset cooking time which is probably preferable so you can keep working on the PNG source asset in a convenient way.

This is really just the basics and mip-map filtering has had a lot of other good stuff written about it:

http://number-none.com/product/Mipmapping,%20Part%201/index.html

http://number-none.com/product/Mipmapping,%20Part%202/index.html

Also see http://code.google.com/p/nvidia-texture-tools/ for some image manipulation source code (the Nvidia Photoshop DDS plugin also has an option to modulate your image by alpha when generating mip-maps).

This is a great little paper about soft-edged particles and pre-multiplied alpha (even though it's not referred too as such)

Soft Edges and Burning Things: Enhanced Real-Time Rendering of Particle Systems

iPhone GL specs

So I've been trying to find out a bit more about the iPhone's capabilities, this site has some good details. No shaders, roughly enough power for 15000 lit triangles @ 30hz. Not too shabby, I wonder about the power consumption and if certain hardware paths drain the battery faster than others. It would be nice to make something that people can play for more than 3 hours.

So the word on the street is that O2 are bringing out a pre-pay iPhone in December, I'm currently debating whether to wait for that or just get an iPod touch which you can still develop for using pretty much the same feature set.

Parallelisation and cups of tea

While I was at Sony I spent a lot of time thinking about making tasks run concurrently and how they should be scheduled to maximise performance.

Recently this kind of analysis has been spilling over into my everyday life and you start seeing ways you could parallelise all sorts of tasks. Of course this is just common sense and something we all do naturally do different degrees.

A simple example is making a cup of tea, you don't want to get the tea bags and the cups first, no, that would be a waste of precious milliseconds. First you switch on the jug and then get to work on the rest of the process in parallel. I imagine anyone who has worked in a kitchen before would be experts at this kind of scheduling and could probably do my job better than I can.